Full Traceability Across Agent Hand‑offs

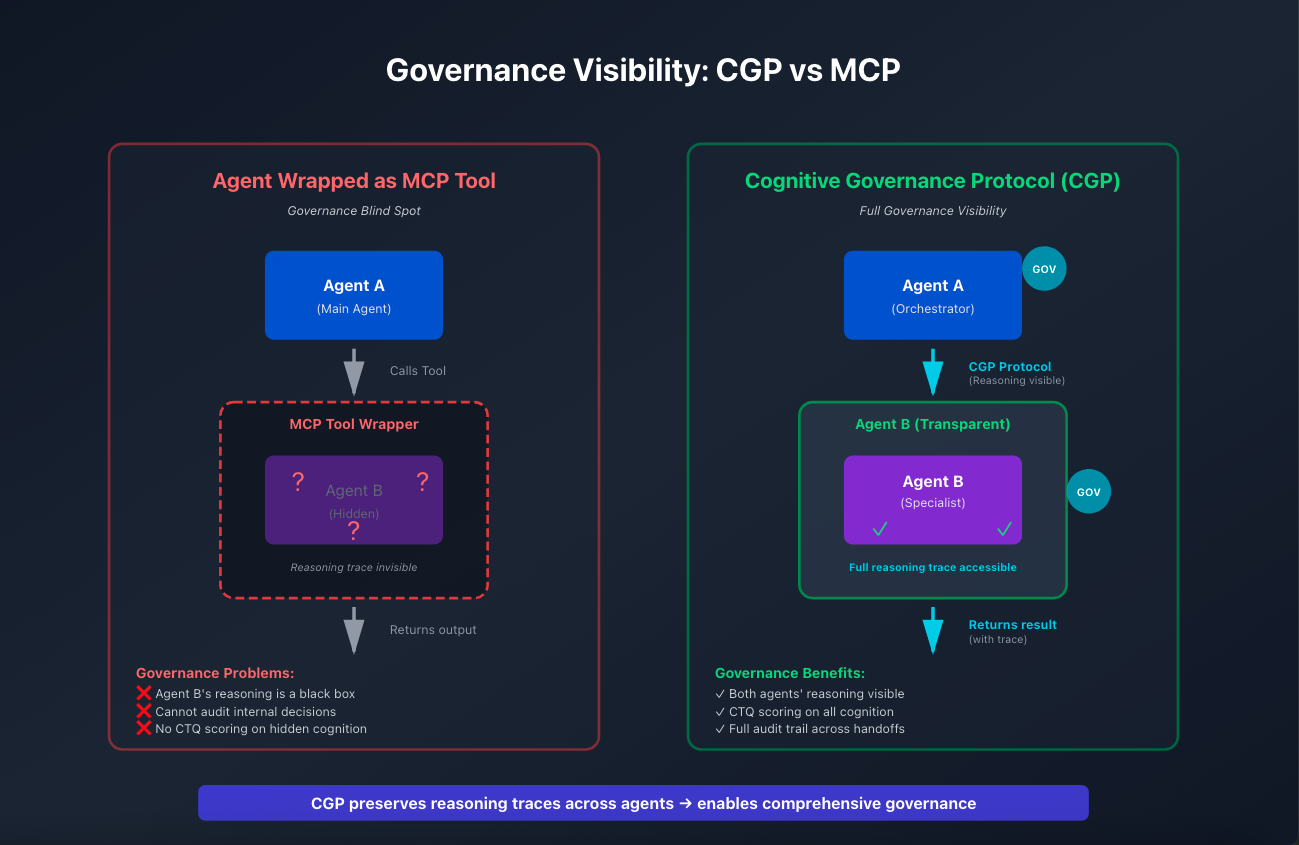

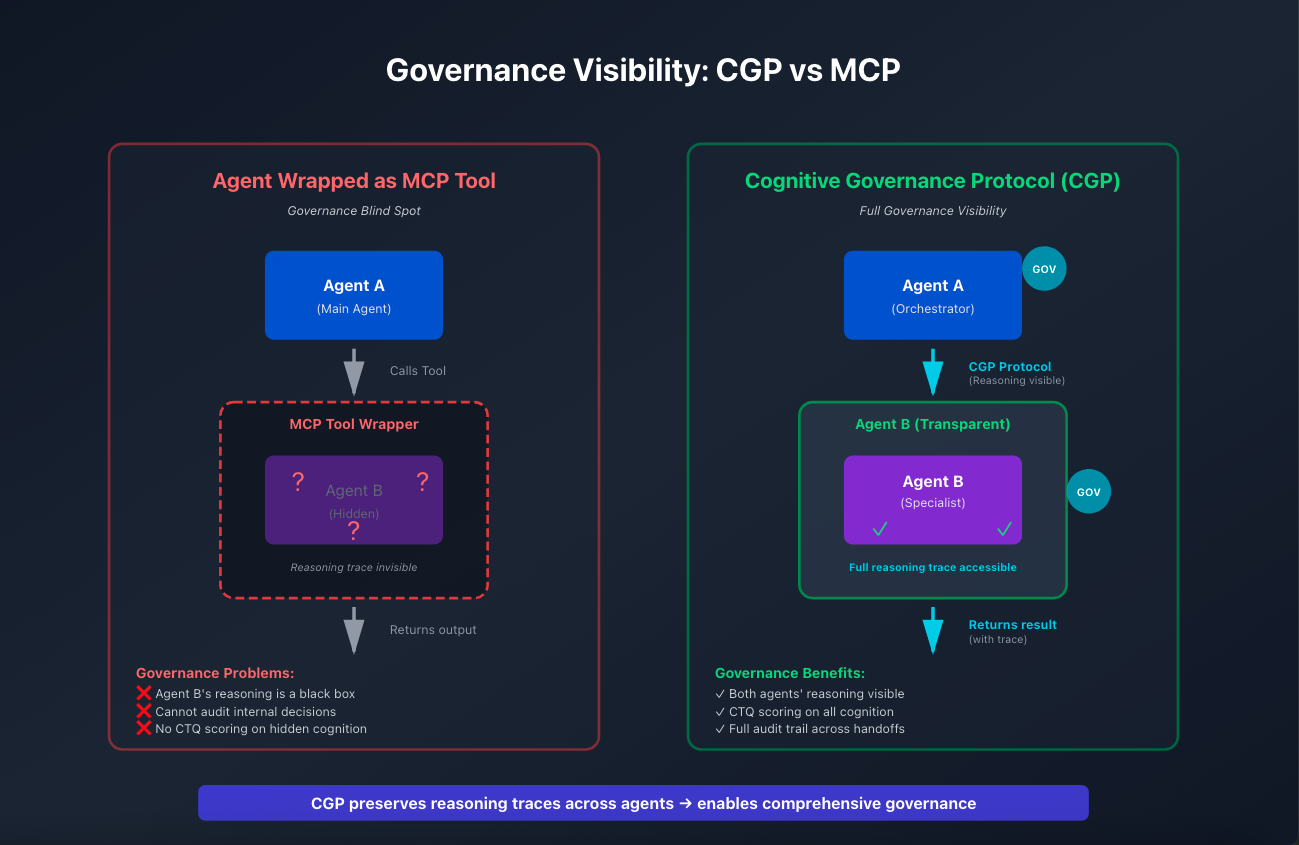

Why an agent-to-agent protocol instead of wrapping agents as tools? Wrapping agents as tools creates black-box blind spots: the calling agent sees only each tool's inputs and outputs, not the reasoning behind them. CGP preserves reasoning traces end to end.

CGP (Cognitive Governance Protocol) is an open standard for AI governance and reasoning transparency that enables real-time oversight of autonomous AI systems. MeaningStack's cognitive governance is built on CGP, a protocol the community can inspect, improve, and build upon.

Our protocol operates in real time, providing comprehensive oversight without disrupting operational workflows.

Steward Agents monitor AI reasoning traces in real time, capturing the complete decision-making process without adding latency to operational workflows. Every reasoning step is logged for analysis.

Each reasoning trace is assessed against Governance Blueprints using our scoring framework. The system identifies policy violations, ethical concerns, incomplete reasoning, and quality issues automatically.

When concerning patterns are detected, human operators receive immediate notifications with complete context. Alerts include severity scores, policy violations, and full reasoning traces for informed decisions.

Authorized operators can halt agent execution, modify reasoning parameters, or escalate decisions through the Human Dashboard. All interventions are recorded with complete audit trails for compliance.

Every observation, evaluation, and intervention is recorded in the immutable Governance Ledger. This creates comprehensive compliance documentation automatically, meeting regulatory audit requirements.

Historical patterns and operator interventions inform continuous improvement of Governance Blueprints. The system learns from past decisions to enhance oversight effectiveness over time.

We believe trustworthy AI requires more than proprietary "black boxes"—it requires open specifications that anyone can verify, audit, and improve.

Most AI governance solutions are proprietary systems where you must trust vendor claims without verification. CGP provides open specifications with complete visibility into how governance actually works.

You're not locked into a single vendor's ecosystem. CGP is vendor-neutral—no single company controls the standard. Build your own implementation or use CGP-compatible tools.

The community can contribute improvements and domain-specific extensions. Just as open protocols like HTTP, SMTP, and TCP/IP transformed networking, CGP brings the benefits of open protocols to AI governance.

CGP enables governance without exposing sensitive data. Organizations maintain control over their data while meeting compliance requirements through standardized oversight mechanisms.

CGP provides comprehensive standards for AI governance across the entire oversight lifecycle.

Structured formats for cognitive traces—how AI agents document their reasoning steps, decision points, and quality indicators in machine-readable formats.
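To make "machine-readable" concrete, here is a minimal Python sketch of a cognitive trace record. The field names (`agent_id`, `task_id`, `kind`, `confidence`) and the step vocabulary are hypothetical assumptions for illustration, not the CGP schema; the point is that each step is typed, ordered, and round-trips losslessly through JSON.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class ReasoningStep:
    step_id: int       # 1-based position in the trace
    kind: str          # hypothetical vocabulary: "observation", "inference", "decision"
    content: str       # the agent's own description of this step
    confidence: float  # self-reported quality indicator in [0, 1]

@dataclass
class CognitiveTrace:
    agent_id: str
    task_id: str
    steps: List[ReasoningStep] = field(default_factory=list)

    def add_step(self, kind: str, content: str, confidence: float) -> None:
        """Append a step, numbering it sequentially and validating the indicator."""
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        self.steps.append(ReasoningStep(len(self.steps) + 1, kind, content, confidence))

    def decision_points(self) -> List[ReasoningStep]:
        """Return only the steps where the agent committed to a choice."""
        return [s for s in self.steps if s.kind == "decision"]

    def to_json(self) -> str:
        # asdict() converts the nested ReasoningStep objects into plain dicts.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "CognitiveTrace":
        data = json.loads(text)
        steps = [ReasoningStep(**s) for s in data.pop("steps")]
        return cls(steps=steps, **data)
```

A Steward receiving `trace.to_json()` can rebuild an equal `CognitiveTrace` with `from_json`, so the same record can be evaluated, stored, and audited by different tools.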

Standardized metrics for evaluating reasoning completeness, consistency, compliance, and bias indicators—enabling objective assessment of AI decision quality.
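As an illustration of what a standardized metric could look like, the sketch below computes two simple scores over a trace: completeness (the share of required step kinds present) and grounding (the share of decision steps that cite an earlier step), used here as a crude stand-in for consistency. Both definitions, the `REQUIRED_KINDS` tuple, and the `cites` field are assumptions made for this example, not metrics defined by CGP.

```python
from typing import Dict, Sequence

# Hypothetical: step kinds a complete trace is expected to contain.
REQUIRED_KINDS = ("observation", "inference", "decision")

def completeness(steps: Sequence[Dict], required: Sequence[str] = REQUIRED_KINDS) -> float:
    """Fraction of required step kinds that appear at least once in the trace."""
    present = {s["kind"] for s in steps}
    return sum(1 for k in required if k in present) / len(required)

def grounding(steps: Sequence[Dict]) -> float:
    """Fraction of decision steps that cite at least one earlier step.

    A decision that cites nothing, or cites a later or nonexistent step, counts
    as ungrounded. Returns 1.0 when there are no decisions; completeness()
    already penalizes a trace with no decision step.
    """
    decisions = [(i, s) for i, s in enumerate(steps) if s["kind"] == "decision"]
    if not decisions:
        return 1.0
    grounded = sum(
        1 for i, s in decisions
        if s.get("cites") and all(0 <= c < i for c in s["cites"])
    )
    return grounded / len(decisions)

def score(steps: Sequence[Dict]) -> Dict[str, float]:
    return {"completeness": completeness(steps), "grounding": grounding(steps)}
```

For a trace containing all three kinds, where one of two decisions cites a step that does not precede it, `score` reports a completeness of 1.0 but a grounding of 0.5, so the two metrics flag different problems.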

Declarative languages for expressing governance policies—defining conditions, constraints, and actions in portable, human-editable formats that work across systems.
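A declarative policy keeps rules as data rather than code. The sketch below assumes a hypothetical JSON Blueprint format in which each rule names a record field, a comparison operator, a threshold, and an action; it is not the actual Blueprint syntax. Operators come from a fixed whitelist, so a Blueprint can be edited by non-programmers without ever executing arbitrary code.

```python
import json
import operator
from typing import Any, Dict, List

# Hypothetical Blueprint: rules are plain data that can be stored, diffed, and versioned.
BLUEPRINT_JSON = """
{
  "blueprint": "credit-decisions",
  "version": "1.2.0",
  "rules": [
    {"id": "R1", "when": {"field": "amount", "op": "gt", "value": 50000}, "action": "escalate"},
    {"id": "R2", "when": {"field": "confidence", "op": "lt", "value": 0.6}, "action": "alert"},
    {"id": "R3", "when": {"field": "uses_protected_attribute", "op": "eq", "value": true}, "action": "halt"}
  ]
}
"""

# Whitelisted comparison operators; unknown operators are rejected, never guessed.
OPS = {"eq": operator.eq, "ne": operator.ne, "gt": operator.gt,
       "ge": operator.ge, "lt": operator.lt, "le": operator.le}

def evaluate(blueprint: Dict[str, Any], record: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return {rule, action} for every rule whose condition matches the record."""
    matches = []
    for rule in blueprint["rules"]:
        cond = rule["when"]
        op = OPS.get(cond["op"])
        if op is None:
            raise ValueError(f"unknown operator {cond['op']!r} in rule {rule['id']}")
        # Rules about fields the record does not contain are skipped.
        if cond["field"] in record and op(record[cond["field"]], cond["value"]):
            matches.append({"rule": rule["id"], "action": rule["action"]})
    return matches
```

Evaluating `{"amount": 75000, "confidence": 0.55, "uses_protected_attribute": False}` against the loaded Blueprint matches R1 (escalate) and R2 (alert) but not R3. Skipping rules whose field is absent is a design choice for this sketch; a stricter engine might treat a missing field as a violation instead.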

Immutable logging standards that capture who made what decision, what reasoning led to it, when and where it occurred, and what policies were applied.
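One common way to make a log tamper-evident is hash chaining: each entry stores the SHA-256 hash of the previous entry, so editing any past record breaks every later link. The sketch below applies that technique to the who, what, reasoning, when, where, and policies fields listed above. The class and field names are hypothetical, and hash chaining is a technique chosen for illustration; this page does not specify how the Governance Ledger achieves immutability.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class GovernanceLedger:
    """Append-only log in which each entry commits to the hash of the one before it.

    This is tamper-evident, not tamper-proof: verify() detects edits, and a real
    deployment would also replicate or externally anchor the latest hash.
    """

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(body: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys, no spaces) so equal content hashes equally.
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, actor: str, decision: str, reasoning: str, location: str,
               policies: List[str], timestamp: Optional[str] = None) -> str:
        body = {
            "actor": actor,          # who made the decision
            "decision": decision,    # what was decided
            "reasoning": reasoning,  # what reasoning led to it
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),  # when
            "location": location,    # where (system or deployment identifier)
            "policies": policies,    # which Blueprint rules were applied
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS,
        }
        entry = dict(body, hash=self._digest(body))
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash and link; False means some entry was altered."""
        prev = GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```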

Escalation and oversight interfaces that define how humans receive alerts, review context, and intervene in AI operations while maintaining complete audit trails.
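A minimal sketch of an escalation interface, assuming severity is a score in [0, 1]: each alert is routed to a channel by severity band, and both the routing and any operator intervention are appended to the alert's audit list. The band thresholds, channel names, and `Alert` fields are hypothetical; the intervention actions mirror the halt, modify, and escalate options described for the Human Dashboard, plus a `dismiss` option added for this example.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical severity bands, checked from most to least severe.
SEVERITY_BANDS = [(0.8, "page_on_call"), (0.5, "dashboard_alert"), (0.0, "log_only")]
OPERATOR_ACTIONS = {"halt", "modify", "escalate", "dismiss"}

@dataclass
class Alert:
    trace_id: str
    severity: float                 # 0.0 (benign) to 1.0 (critical)
    violations: List[str]           # ids of the Blueprint rules that matched
    audit: List[str] = field(default_factory=list)

def route(alert: Alert) -> str:
    """Pick the channel of the first band whose threshold the severity meets."""
    if not 0.0 <= alert.severity <= 1.0:
        raise ValueError("severity must be in [0, 1]")
    for threshold, channel in SEVERITY_BANDS:
        if alert.severity >= threshold:
            alert.audit.append(f"routed:{channel}")
            return channel
    raise AssertionError("unreachable: the lowest band starts at 0.0")

def intervene(alert: Alert, operator_id: str, action: str) -> None:
    """Record an operator's decision on the alert's audit trail."""
    if action not in OPERATOR_ACTIONS:
        raise ValueError(f"unsupported action {action!r}")
    alert.audit.append(f"{operator_id}:{action}")
```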

Standards for cross-system compatibility—ensuring governance artifacts remain interpretable across organizations, platforms, and AI frameworks.

A systematic approach to implementing cognitive governance in your AI infrastructure.

Steward Agents integrate with existing AI infrastructure through standardized APIs. They observe agent reasoning without disrupting workflows or adding operational latency. The deployment is model-agnostic and works with any LLM provider.

Encode organizational policies, regulatory requirements, and ethical standards as machine-readable Governance Blueprints. Blueprints are portable, versionable, and can be updated at runtime without system rebuilds.
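Updating Blueprints at runtime can be as simple as having evaluators look up the active version for each decision instead of loading rules once at startup. The registry below is a hypothetical sketch of that pattern: published versions are copied and never overwritten, the newest one is active, and rollback reactivates the previous version. The class and method names are assumptions, not part of any published CGP SDK.

```python
import copy
import threading
from typing import Any, Dict, List, Tuple

class BlueprintRegistry:
    """Versioned Blueprint store whose active version can change while the system runs."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._versions: Dict[str, Dict[str, Any]] = {}  # every version ever published
        self._history: List[str] = []                  # activation order; last is active

    def publish(self, version: str, blueprint: Dict[str, Any]) -> None:
        """Store a new version and make it active; existing versions are never overwritten."""
        with self._lock:
            if version in self._versions:
                raise ValueError(f"version {version!r} already published")
            # Deep copy so later edits to the caller's dict cannot alter this version.
            self._versions[version] = copy.deepcopy(blueprint)
            self._history.append(version)

    def active(self) -> Tuple[str, Dict[str, Any]]:
        """Return (version, blueprint) for the currently active version."""
        with self._lock:
            if not self._history:
                raise LookupError("no blueprint published yet")
            version = self._history[-1]
            return version, self._versions[version]

    def rollback(self) -> str:
        """Deactivate the current version and reactivate the previous one."""
        with self._lock:
            if len(self._history) < 2:
                raise LookupError("no earlier version to roll back to")
            self._history.pop()
            return self._history[-1]
```

An evaluator that calls `registry.active()` at the start of each decision picks up a newly published version on its next call, with no restart, and can record the returned version string alongside its result so audits show exactly which Blueprint was applied.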

Steward Agents continuously evaluate reasoning quality against Governance Blueprints. They score decisions, identify concerning patterns, and maintain complete reasoning visibility across all operational AI agents.

When Stewards detect concerning reasoning, human operators receive immediate alerts with complete context through the Human Dashboard. Operators can halt execution, modify parameters, or escalate—maintaining meaningful control.

Every decision, evaluation, and intervention is recorded in the immutable Governance Ledger. This creates complete compliance documentation automatically, satisfying EU AI Act Article 14 requirements for human oversight.

The MeaningStack protocol is built on fundamental principles that ensure effective governance at scale.

Steward observation happens in parallel with operational AI execution. Governance never blocks or slows down production systems, ensuring business continuity.
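One way to keep governance off the critical path is to have the agent hand each reasoning event to a queue and let the Steward evaluate it on a separate worker. The sketch below assumes an in-process thread and Python's unbounded `queue.SimpleQueue`, whose `put` never blocks; the `StewardObserver` class is hypothetical. Because CPU-bound work in CPython threads shares the GIL, a production Steward would more plausibly run in a separate process or service, but the hand-off pattern is the same.

```python
import queue
import threading
from typing import Any, Callable, Dict, List

class StewardObserver:
    """Evaluates reasoning events on a background thread, off the agent's critical path."""

    _STOP = object()  # sentinel that tells the worker to exit

    def __init__(self, evaluate: Callable[[Dict[str, Any]], None]) -> None:
        self._events = queue.SimpleQueue()  # unbounded, so put() never blocks
        self._evaluate = evaluate
        self.errors: List[BaseException] = []
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def observe(self, event: Dict[str, Any]) -> None:
        """Called by the agent; returns immediately without waiting for evaluation."""
        self._events.put(event)

    def _run(self) -> None:
        while True:
            event = self._events.get()
            if event is self._STOP:
                return
            try:
                self._evaluate(event)
            except Exception as exc:  # keep observing even if one evaluation fails
                self.errors.append(exc)

    def close(self) -> None:
        """Stop after every event queued so far has been evaluated."""
        self._events.put(self._STOP)
        self._worker.join()
```

The unbounded queue trades memory for never blocking the agent. A bounded queue would cap memory but force a choice between blocking and dropping events, and dropping would conflict with logging every reasoning step.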

Works with any LLM provider or agent framework through standardized APIs. Organizations retain flexibility to change models without rebuilding governance infrastructure.

Humans remain in meaningful control through intelligent alert systems and intervention mechanisms. The protocol amplifies human judgment rather than replacing it.

Built specifically to meet EU AI Act Article 14 requirements. Every aspect of the protocol creates compliance documentation automatically without additional overhead.

We're collaborating with AI Safety Camp to empirically test whether governance can function as portable, participatory infrastructure for multi-agent AI systems.

Testing whether the Cognitive Governance Protocol (CGP) can enable safe multi-agent collaboration through portable governance artifacts, real-time oversight, and community-driven standards.

Open-source SDK implementing CGP primitives, standardized Governance Blueprint schemas, Steward Agent prototypes for reasoning oversight, and cross-framework compatibility testing with LangChain, CrewAI, and AutoGen.

Can governance artifacts transfer across contexts? Can communities iteratively refine them? Do they provide real safety value? Can agents from different organizations verify governance compatibility before collaboration?

We're seeking protocol engineers, schema developers, AI safety researchers, and framework developers to help translate CGP from concept to functional open protocol. All work is community-driven with results released openly.

CGP SDK v0.1, Governance Blueprint schema library, Steward Agent prototype, empirical study report on governance portability, and design guidelines for participatory governance infrastructure.

Demonstrating that AI governance can function as open, portable, and participatory infrastructure—shifting AI safety from private compliance to collective stewardship and establishing foundations for democratic oversight of distributed intelligence.